Streamlining Model Deployment: How AI Legalese Decoder Empowers Aspiring Full-Stack Data Scientists

- January 5, 2025

- Posted by: legaleseblogger

- Category: Related News

Try Free Now: Legalese tool without registration

Introduction to Model Development and Deployment

This section walks through the basics of model development with an eye toward deployment. Our objective at this stage is not to optimize the model's performance. Instead, we build a straightforward model with a limited set of features so that we can concentrate on the process of deploying it.

Objective of the Model

In our illustrative example, we aim to predict the salary of data professionals from a handful of features such as experience level, job title, employment type, and company size. What we learn from this example will serve as a foundation for later, more complex models that require real performance tuning.

Data Source

For this project, we use a public dataset to train our model. You can access the data here: https://www.kaggle.com/datasets/ruchi798/data-science-job-salaries. The dataset is released under CC0, which places it in the public domain. To keep things simple, minor modifications have been made to the dataset to limit the number of options available for specific features.

Required Libraries for Data Manipulation

To start, we import the libraries needed for data manipulation and model building:

#import packages for data manipulation

import pandas as pd

import numpy as np

#import packages for machine learning

from sklearn import linear_model

from sklearn.model_selection import train_test_split

from sklearn.preprocessing import OneHotEncoder, OrdinalEncoder

from sklearn.metrics import mean_squared_error, r2_score

#import packages for model persistence

import joblib

Data Inspection

Before diving into the modeling process, it is essential to familiarize ourselves with the dataset. Understanding the nature and structure of the data will guide us in choosing the best modeling approaches and features to include.
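The listing in this article never shows how `salary_data` is created, so here is a minimal sketch of loading and inspecting it. The inline sample rows are made-up placeholders, and the column list is an assumption inferred from the names used later in the article. With the real dataset you would pass the path of your downloaded CSV to `pd.read_csv` instead.

```python
import io

import pandas as pd

# Placeholder rows standing in for the downloaded CSV (values are made up).
sample_csv = io.StringIO(
    "experience_level,employment_type,job_title,salary_in_usd,company_size\n"
    "EN,FT,Data Analyst,60000,S\n"
    "MI,FT,Data Scientist,95000,M\n"
    "SE,FT,Data Engineer,140000,L\n"
)

# In practice, replace sample_csv with the path to your local copy of the data.
salary_data = pd.read_csv(sample_csv)

# First look: a few rows, the column types, and any missing values.
print(salary_data.head())
print(salary_data.dtypes)
print(salary_data.isna().sum())

# List the distinct options per categorical feature; these drive the encoders.
for col in ["experience_level", "employment_type", "job_title", "company_size"]:
    print(salary_data[col].value_counts())
```

Checking the distinct category values up front matters because the ordinal encoders used later need every category listed explicitly.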

Data Visualization

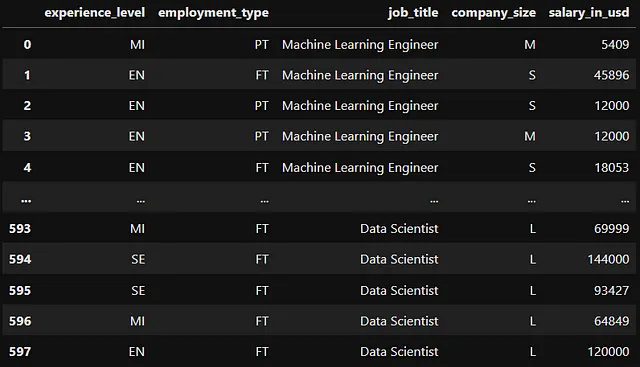

Here is a visual representation to assist in gathering insights from the data that we will be working with:

Image by Author

Data Preprocessing: Encoding Features

Given that our features are predominantly categorical, we will need to encode our data into a numerical format to make it suitable for the model. This step is crucial because machine learning algorithms typically require numeric input for computations.

Encoding Techniques

Below, we use ordinal encoders to convert experience levels and company sizes into numerical representations. These encodings reflect an implicit order. Note that scikit-learn's OrdinalEncoder numbers the categories from zero in the order we list them (for example, 0 = entry level, 1 = mid-level, etc.).

For job titles and employment types, we will create dummy variables for each option while ensuring that the first category is dropped to prevent multicollinearity issues.
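To make the two encodings concrete, here is a small sketch on a toy frame. The rows are invented for illustration only; the category codes (`EN`, `MI`, `SE`, `EX`) match those used in this article, while the employment type codes (`FT`, `PT`, `CT`) are assumed.

```python
import pandas as pd
from sklearn.preprocessing import OrdinalEncoder

# Toy rows, invented for illustration only.
toy = pd.DataFrame({
    "experience_level": ["EN", "SE", "MI", "EX"],
    "employment_type": ["FT", "PT", "FT", "CT"],
})

# Ordinal encoding: categories are numbered from 0 in the order listed here.
encoder = OrdinalEncoder(categories=[["EN", "MI", "SE", "EX"]])
toy["experience_level_encoded"] = encoder.fit_transform(
    toy[["experience_level"]]
).ravel()

# Dummy encoding: one 0/1 column per option. drop_first=True removes the
# first option in sorted order ("CT"), which becomes the implicit baseline.
toy = pd.get_dummies(toy, columns=["employment_type"], drop_first=True, dtype=int)

print(toy)
```

A row with zeros in every remaining `employment_type_*` column belongs to the dropped baseline category, so no information is lost.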

# Use ordinal encoder to encode experience level

encoder = OrdinalEncoder(categories=[['EN', 'MI', 'SE', 'EX']])

salary_data['experience_level_encoded'] = encoder.fit_transform(salary_data[['experience_level']])

# Use ordinal encoder to encode company size

encoder = OrdinalEncoder(categories=[['S', 'M', 'L']])

salary_data['company_size_encoded'] = encoder.fit_transform(salary_data[['company_size']])

# Encode employment type and job title using dummy columns

salary_data = pd.get_dummies(salary_data, columns=['employment_type', 'job_title'], drop_first=True, dtype=int)

# Drop original columns

salary_data = salary_data.drop(columns=['experience_level', 'company_size'])

Preparing for Modeling

Once our model inputs have been transformed, we can split the data into training and testing sets. The goal is to let the model learn from the training data while holding back some unseen data to test its predictive capabilities.

Implementing the Linear Regression Model

We will implement a linear regression model to predict employee salaries using the prepared features:

# Define independent and dependent features

X = salary_data.drop(columns='salary_in_usd')

y = salary_data['salary_in_usd']

# Split between training and testing sets

X_train, X_test, y_train, y_test = train_test_split(
    X, y, random_state=104, test_size=0.2, shuffle=True)

# Fit linear regression model

regr = linear_model.LinearRegression()

regr.fit(X_train, y_train)

# Make predictions

y_pred = regr.predict(X_test)

# Print the coefficients

print("Coefficients: \n", regr.coef_)

# Print the MSE

print("Mean squared error: %.2f" % mean_squared_error(y_test, y_pred))

# Print the R2 value (plain R-squared, not adjusted)

print("R2: %.2f" % r2_score(y_test, y_pred))

Evaluating Model Performance

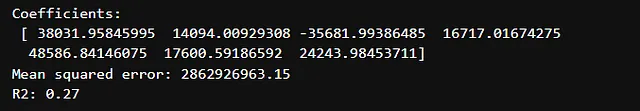

Let’s take a moment to assess how our model performs with the data at hand. We examine key metrics such as the R-squared value and mean squared error to gauge the effectiveness of our prediction:

Image by Author

The observed R-squared value is approximately 0.27, meaning the model explains only about 27% of the variance in salaries on the test set. There is substantial room for improvement: we would need more comprehensive data and potentially a richer set of features to construct a robust predictive model.
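To show what these metrics measure, here is a minimal sketch that computes R-squared and the root mean squared error by hand with NumPy. The helper names `r_squared` and `rmse` are hypothetical, introduced only for this illustration; in the article's own code the equivalent values come from scikit-learn's `r2_score` and `mean_squared_error`.

```python
import numpy as np


def r_squared(y_true, y_pred):
    """R2 = 1 - SS_res / SS_tot: share of variance explained by the model."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    ss_res = np.sum((y_true - y_pred) ** 2)         # error left after the model
    ss_tot = np.sum((y_true - y_true.mean()) ** 2)  # error of predicting the mean
    return 1.0 - ss_res / ss_tot


def rmse(y_true, y_pred):
    """Square root of the MSE, expressed in the same units as the target."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    return float(np.sqrt(np.mean((y_true - y_pred) ** 2)))


# A model that always predicts the mean scores R2 = 0; a perfect model scores 1.
print(r_squared([1, 2, 3], [2, 2, 2]))
print(r_squared([1, 2, 3], [1, 2, 3]))
print(rmse([0, 0], [3, 4]))
```

Because the mean squared error is in squared dollars, taking its square root gives an error figure in dollars, which is usually easier to reason about.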

Model Persistence

Despite the need for enhancements, we will proceed to save our model for future use. Utilizing joblib for model persistence will ensure that the efforts made thus far are not lost:
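As a sketch of the full round trip that persistence enables, the example below trains a toy model, saves it, loads it back, and confirms the restored model predicts the same values. The toy data and the file name `toy_model.joblib` are assumptions for illustration; the article's own model is saved in the step that follows.

```python
import os
import tempfile

import joblib
import numpy as np
from sklearn.linear_model import LinearRegression

# Toy data following y = 2x + 1, invented for illustration only.
X_toy = np.array([[0.0], [1.0], [2.0], [3.0]])
y_toy = np.array([1.0, 3.0, 5.0, 7.0])
model = LinearRegression().fit(X_toy, y_toy)

# Save to a temporary directory, then load the model back from disk.
with tempfile.TemporaryDirectory() as tmp_dir:
    model_path = os.path.join(tmp_dir, "toy_model.joblib")
    joblib.dump(model, model_path)
    restored = joblib.load(model_path)

# The restored model should give the same predictions as the original.
new_X = np.array([[4.0]])
print(model.predict(new_X), restored.predict(new_X))
```

When loading a saved model in a deployed service, the input columns must match the training features in both name and order, and the scikit-learn version should match the one used for saving.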

# Save model using joblib

joblib.dump(regr, 'lin_regress.sav')

How AI Legalese Decoder Can Help

When contracts or legal agreements use technical language or complex terminology, the AI Legalese Decoder can streamline their interpretation. The tool can help stakeholders, including data professionals using predictive models, understand the terms associated with data usage, privacy, and compliance. By using AI Legalese Decoder, users can address legal considerations surrounding model data handling and deployment, thereby reducing potential liabilities and supporting transparent operations.

In summary, while we have covered the foundational elements of model development, tools like AI Legalese Decoder help translate legal jargon into actionable insights, support legal compliance, and promote informed decision-making.
